TikTok: The dial moves for Data Protection of Minors on Social Media

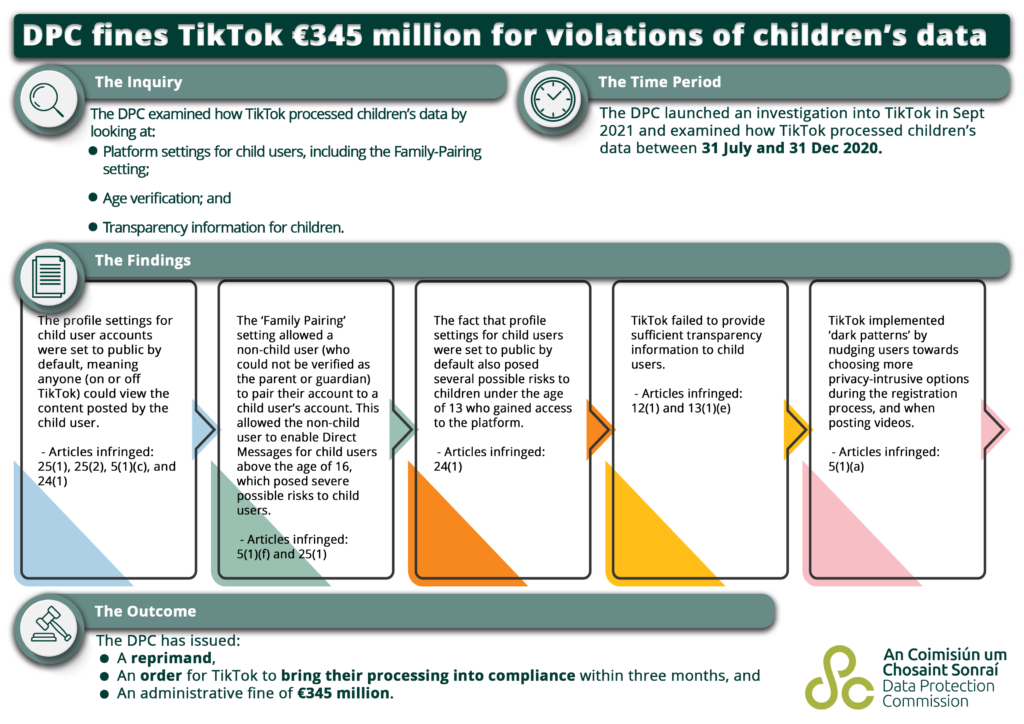

September brought a Decision from the Irish Data Protection Commission (DPC) following an inquiry into one of the largest social media platforms, used by millions of people including Child Users. The inquiry concluded with a set of corrective measures and offers useful insights for data protection professionals. In line with the binding decision of the European Data Protection Board (EDPB), which addressed the objections raised by other supervisory authorities, the Irish supervisory authority imposed three corrective measures:

- A reprimand.

- An order requiring TikTok Technology Limited to bring its processing into compliance with the GDPR.

- And what always catches readers’ attention: an administrative fine of €345 million.

But let’s start from the beginning. What was the object of the inquiry? What processing activities were found to be non-compliant and why?

The Background

In January 2021, the DPC initiated an own-volition inquiry into TikTok Technology Limited (hereafter TTL) concerning specific processing activities carried out on its users’ personal data, in particular the data of users under the age of 18, who are considered vulnerable data subjects under the GDPR. The temporal scope was limited to the period from 31 July 2020 to 31 December 2020, and the processing activities examined were the following:

- Public-by-default settings.

- Age verification efforts.

- Informing the data subject.

The DPC focused on determining whether, and to what extent, the controller complied with the GDPR principles, and more specifically with Articles 5, 24 and 25, when processing children’s personal data. As the decision points out, given the vulnerability of data subjects under 18, applying the principles of data protection by design and by default, data minimisation, purpose limitation and accountability is crucial to protecting their rights and freedoms.

The Investigation

In assessing the appropriateness of the technical and organisational measures required by Articles 24 and 25 GDPR, the DPC examined the purposes of TikTok’s processing activities and the risks, of varying likelihood and severity, to the rights and freedoms of data subjects.

In its numerous submissions, TTL claimed that the purpose of processing child users’ data was to enable users to share their own content and to show them content they would likely find interesting, allowing users to express themselves in a creative and engaging way, to take part in multicultural exchanges and to discover new perspectives, ideas and inspiration. Although TTL carried out a set of Data Protection Impact Assessments (DPIAs) covering the processing activities under inquiry, the DPC identified several risks that had not been recorded or considered.

One of the most significant risks missing from the assessment was the risk that ‘public-by-default’ settings posed to Child Users: they could lose autonomy and control over their data and, given the public nature of their use of the TikTok platform, become targets for bad actors. This could in turn expose them to a wide range of potentially deleterious activities, including online exploitation or grooming, or further physical, material or non-material damage where a Child User inadvertently reveals identifying personal data. The DPC also highlighted the risks of social anxiety, self-esteem issues, bullying and peer pressure for Child Users.

The inquiry also examined the Family Pairing setting, which allows intended-parent/guardian users to control some aspects of the intended-child user’s account, such as managing screen time or adding stricter restrictions on available content, while preserving the child user’s autonomy by allowing them to disable the feature at any time. According to the DPC, this function raised two data protection concerns. The first was whether TTL applied sufficient technical and organisational measures to verify the relationship between the two account holders; the second concerned one of the controls granted to the parent/guardian user. Such functionalities are generally intended to protect child users by allowing the parent/guardian to apply stricter privacy settings. This functionality, however, allowed the parent/guardian to enable direct messages for child users over 16, which, in the DPC’s view, amounted to applying less strict privacy settings.

The inquiry also analysed the effectiveness of the age verification measures taken by TTL. When it comes to age verification, the challenge of implementing measures that are both proportionate and effective persists. As the DPC accepted, the use of hard identifiers “in order to determine the age of children accessing the platform (…) would be disproportionate, given that children, and particularly younger children, are unlikely to hold or have access to such hard identifiers and this would act to excluding or locking out Child Users who would otherwise be able to utilise the platform”.

For its part, the EDPB stressed the need for a risk-based approach and held that the measures taken by TTL were not sufficiently effective given the risks of the processing activities. However, in view of the state of the art of age verification mechanisms, it was not in a position to conclude that TTL had infringed its obligations under Article 25 GDPR.

Finally, following the objection raised by the Berlin Supervisory Authority, the information provided to child users was assessed in light of the principles of transparency and fairness under Article 5 GDPR.

The Key Findings

In a nutshell, the main findings of the DPC were the following:

- Regarding the public-by-default setting for child users, TTL failed to implement technical and organisational measures to ensure that, by default, only the personal data necessary were processed, and was unable to demonstrate that the processing was performed in accordance with the GDPR (Articles 5(1)(c), 24(1), 25(1) and 25(2) GDPR).

- Regarding the Family Pairing setting, TTL failed to implement appropriate technical and organisational measures designed to implement the integrity and confidentiality principle in an effective manner and to integrate the necessary safeguards into the processing in order to meet the requirements of the GDPR (Articles 5(1)(f) and 25(2) GDPR).

- Regarding public-by-default settings for users under the age of 13 who gained access to the platform despite the age gate, TTL did not implement appropriate technical and organisational measures to ensure, and to be able to demonstrate, that the processing was performed in accordance with the GDPR (Article 24(1) GDPR).

- Regarding the information provided to child users, TTL did not comply with its obligation under Article 13(1)(e) GDPR, as the scope and consequences of the processing were not presented to data subjects in a consistent, transparent and intelligible manner.

- Regarding the way in which the information was provided, TTL infringed the principle of fairness under Article 5(1)(a) GDPR.

What happens next?

As expected, TTL lodged an appeal with the Irish High Court on 27 September, so it remains to be seen whether the court will confirm the DPC decision or replace it with other measures.

In addition to imposing one of the most severe fines on a tech company headquartered in Ireland, the DPC decision provides meaningful insights into how GDPR principles apply to the processing of personal data of data subjects under 18. This is relevant for any organisation that processes children’s personal data in order to provide them with a service, and it may affect our clients in the charity sector.

For organisations that need to process minors’ personal data, these are the main takeaways from the Decision, read in conjunction with the guidance published by the DPC in 2021 (Fundamentals for a Child-Oriented Approach to Data Processing):

- Broad interpretation of the accountability principle: although the controller is not responsible for harm that a third party may cause to the data subject through the processing activity, controllers should take that risk into account when assessing the appropriate technical and organisational measures.

- Data subject control: even where the data subject is vulnerable, the controller must implement appropriate technical and organisational measures that allow them to maintain control over their personal data. Parental control settings must not be designed in a way that allows guardians to apply less strict privacy settings.

- Floor of protection: age verification mechanisms are still in development, and less intrusive methods of verifying a data subject’s age are not 100% effective. Taking into account the state of the art, the cost of implementation and the risks involved, controllers should apply the most effective measures available and provide a default level of protection to all users.

- Transparency obligation: the principle of transparency is crucial to enable data subjects to exercise their rights and freedoms. When providing information under Articles 12 and 13, controllers must present it in a concise, transparent, intelligible and easily accessible form, using clear and plain language. The DPC pointed out that, in TTL’s privacy policy, terms such as ‘public’, ‘anyone’ and ‘everyone’ were not consistent or transparent; on the contrary, they were ambiguous.

- Principle of fairness: the EDPB specified in its binding decision that the way in which information is presented to data subjects can breach the principle of fairness, for example where options are presented in a way that ‘nudges’ the data subject towards allowing the controller to collect more personal data than if the options were presented in an equal and neutral way. The EDPB recalled that information and options relating to data processing should be provided in an objective and neutral way, avoiding any deceptive or manipulative language or design.

How we can help

Our long experience with clients in different industries helps us identify best practices based on the nature, scope, context and purposes of your business. If you are concerned about protecting the privacy of your youngest data subjects and need advice on the implications of this decision for your business, we are the right people to consult. We can help you develop a compliant data protection framework so that you can deliver a reliable service built on data protection by design and by default.