The Problem with the Trolley Problem – What are Academics to do when the world really needs them?

People love the Trolley Problem. Picture the scenario: you are standing by a train line next to a lever. On your right is a fast-approaching train (or trolley, to keep to the original version); on your left is a fork in the line. The track the train is set to take ends in death for five people, but if you divert the train with that handy lever, it will kill one person. The question is simple: do you pull the lever?

This, in short, is the Trolley Problem. It is intuitive, easy to understand and sometimes fun. You can update it a million times over.

In fact, MIT have done just that with their Moral Machine Engine which tries to crowdsource responses to a plethora of scenarios.

The Trolley Problem is everything that academia usually isn’t. People enjoy it, can grasp it and can play with it. There is no secretive jargon to learn, and it works as a pub game or even as a way of explaining moral difficulties to kids.

And if that weren’t enough, this thought experiment is actually relevant. Yes, interest in the Trolley Problem has grown significantly of late, as manufacturers looking to release driverless cars need to decide how to programme their AI systems for real-life trolley problems. Should the driverless car go left and kill one person instead of going straight and killing five people? What if that one person happens to be the vehicle’s owner?

Earlier this week Vox Media’s Recode Podcast did an episode on the issues involved (The 21st Century Trolley Problem).

In January of 2020 one of the key panels at the Annual CPDP (Computers, Privacy & Data Protection) Conference in Brussels focused on Trolley Problems and autonomous cars. This was a fascinating session with excellent speakers at a key industry conference.

The problem is that driverless cars are not the entirety of the ethical problems with AI. In fact, the Trolley Problem isn’t even the entirety of the ethical problems with driverless cars. What about the loss of jobs across society for bus drivers? Or the impact on the environment? Or the ways this technology might change how we live together and interact with each other?

These problems aren’t as easy to lay out. One could put an out-of-work bus driver on one track and the reduced accident rate on the other and have at it. But this seems to miss the point.

The other issue is that these problems distract. That excellent talk on Trolley Problems was put on by the Future of Privacy Forum, a Washington lobbying group backed by Facebook, Google and Microsoft among others. This is an instance of ethics washing. Big tech firms get to advance their agendas, which carry many privacy implications, while covering themselves in ethical research. Having this research focus on the narrow and entertaining realm of autonomous vehicles allows us to forget some of the other questions. In this case, the larger and more frightening ethical and societal implications of a world dominated by incredibly invasive tech giants simply fade away.

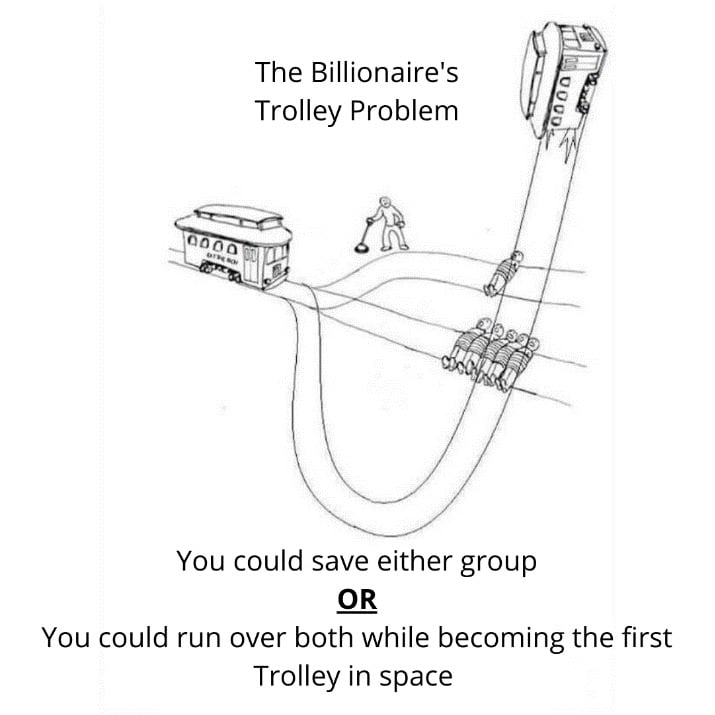

A better use of the Trolley Problem in this case might be:

So when real ethical problems emerge, what are we to do? This is when the academic world needs more tools like the Trolley Problem, even if only to communicate the nature of the situation we are in.

At a conference in Twente which I attended earlier this month, academics working in the field of ethical AI presented their methodology for Ethical Assessment. It was a long presentation that brought a full team together and even included a workshop in which the audience had a chance to try out the methodology.

They had stripped out everything that could be considered an arbitrary element of their methodology: components like risk scoring and the weighting of concerns, which are not based on objective reality.

The only problem for me is that this methodology wasn’t really a methodology. It felt more like a high school essay prompt: “Technology X raises ethical concerns A, B and C. Explain the technology and the concerns, and how you think that the issues should be resolved.”

To borrow from Gertrude Stein, with this approach it felt as though “there is no there there”. That is to say, the methodology is without method. The outcome is that judging who is carrying out ethical assessments correctly through such a methodology is no simple task.

Yes, risk approaches can be arbitrary, but they can be useful. At Castlebridge we utilise basic techniques for measuring risks and for gathering opinions on how the ethical trade-offs in a situation are understood.

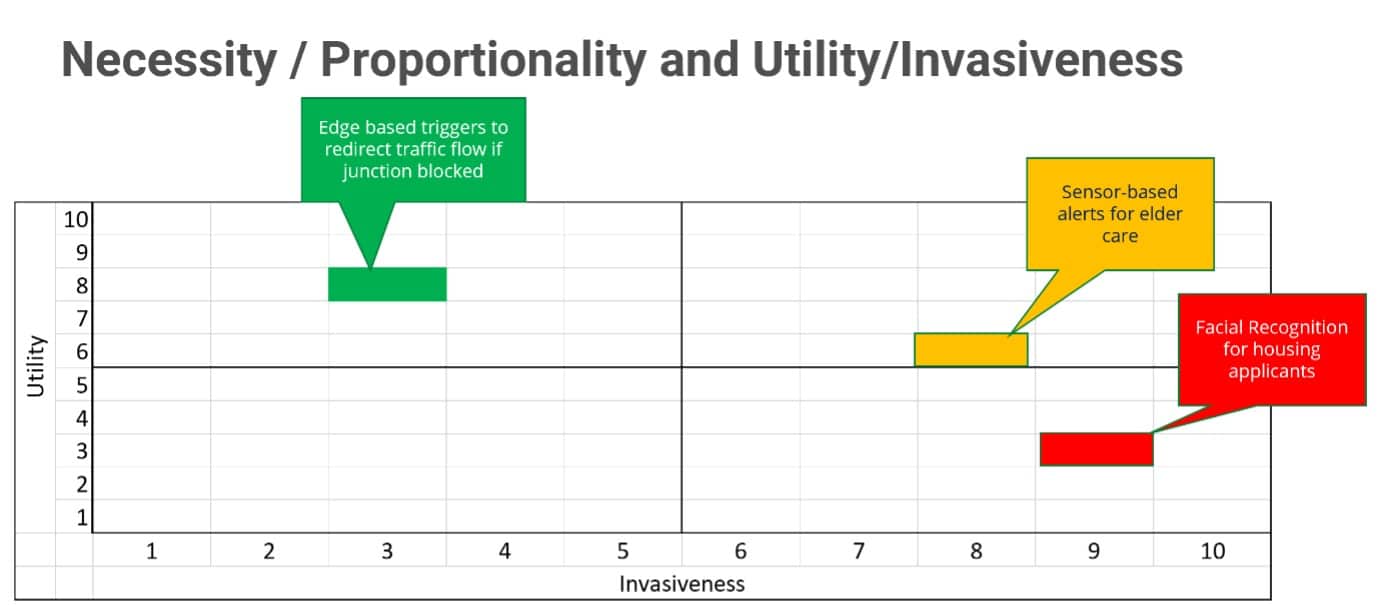

One useful metric is simply a scoring line of Utility vs Invasiveness. We ask people to give an idea/technology/policy a score out of ten for utility and a score out of ten for invasiveness. We lay out the scores on a grid as below:

Some ideas score high for utility and low for invasiveness, in this case indicated in green. The question to ask here is “Why aren’t we doing this?” Some are very high in invasiveness and low in utility, in this case indicated in red. The question to ask is “Why do this?” Most issues are somewhere in the middle, here indicated in yellow. The question here is “How can we do this better?”

This is not science, but it is a tool to allow deeper discussions to emerge.
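The three zones of the grid can be sketched in a few lines of Python. This is only an illustration and not how Castlebridge actually implements its scoring: the cut-offs `HIGH` and `LOW`, the `Score` class and the `zone` function are all assumptions made here, since the description above does not say where one colour ends and the next begins.

```python
from dataclasses import dataclass

# Assumed cut-offs. The article does not state where the zones begin or end.
HIGH = 7
LOW = 3

# The question attached to each zone, as described in the text.
QUESTIONS = {
    "green": "Why aren't we doing this?",
    "red": "Why do this?",
    "yellow": "How can we do this better?",
}


@dataclass(frozen=True)
class Score:
    """One person's scores (out of ten) for an idea, technology or policy."""
    name: str
    utility: int
    invasiveness: int


def zone(score: Score) -> str:
    """Place a score in the green, red or yellow zone of the grid."""
    for value in (score.utility, score.invasiveness):
        if not 0 <= value <= 10:
            raise ValueError("scores must be between 0 and 10")
    if score.utility >= HIGH and score.invasiveness <= LOW:
        return "green"   # useful and not very invasive
    if score.invasiveness >= HIGH and score.utility <= LOW:
        return "red"     # invasive and not very useful
    return "yellow"      # everything in between


if __name__ == "__main__":
    # Hypothetical proposals, invented purely for illustration.
    proposals = [
        Score("Proposal A", utility=9, invasiveness=2),
        Score("Proposal B", utility=2, invasiveness=9),
        Score("Proposal C", utility=6, invasiveness=6),
    ]
    for proposal in proposals:
        colour = zone(proposal)
        print(f"{proposal.name}: {colour} - {QUESTIONS[colour]}")
```

Moving the cut-offs changes which ideas land in which zone, which is rather the point: the numbers are there to start the conversation, not to settle it.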

When it comes to scoring risks, we keep a baseline of our risk assessments and attempt to be consistent. We also attempt to reconsider our scoring over time. If we frequently label a risk as having a low likelihood of occurrence, and it in fact occurs quite regularly, then we are forced to update our scoring.

In one of those apocryphal quotes, the economist John Maynard Keynes is said to have answered a critique with the line “When the facts change, I change my mind. What do you do, sir?”
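That habit of revisiting scores can be sketched as a simple check of a risk’s label against how often it has actually occurred. Again, this is a hedged illustration rather than our actual process: the likelihood bands in `BANDS`, the idea of counting occurrences per review period, and the function names are all assumptions introduced for the example.

```python
# Assumed likelihood bands: each label covers observed rates up to its upper
# bound, where the rate is the share of review periods in which the risk occurred.
BANDS = [("low", 0.10), ("medium", 0.40), ("high", 1.00)]


def band_for(rate: float) -> str:
    """Return the likelihood label whose band contains the observed rate."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    for label, upper in BANDS:
        if rate <= upper:
            return label
    return BANDS[-1][0]  # not reached, since the last band ends at 1.0


def review_label(current: str, periods_with_occurrence: int, periods: int):
    """Compare the current label with the evidence.

    Returns (suggested_label, changed), where changed is True when the
    facts no longer support the label we have been using.
    """
    if current not in {label for label, _ in BANDS}:
        raise ValueError(f"unknown label: {current}")
    if periods <= 0:
        raise ValueError("need at least one review period")
    suggested = band_for(periods_with_occurrence / periods)
    return suggested, suggested != current


if __name__ == "__main__":
    # A risk labelled "low" that occurred in 6 of the last 12 periods.
    print(review_label("low", 6, 12))
```

When the second value comes back as `True`, the facts have changed and the score should change with them.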

To be sure, the scoring of risks is more an art than a science. But the Trolley Problem hardly belongs in the hard science category either. It is a useful framework that allows us to examine complex issues in a clear way. As the complexities around us grow in unprecedented ways, a few more tricks like this from the world of academia might just help.

Castlebridge can help you get to grips with any complex data issues you might face. Why not get in touch to see how we can help!