The Contractual Obligation Data Protection Day Post

Yesterday was Data Protection Day (or Data Privacy Day if you want to ignore the origins of the 28th January as a commemorative day in the calendar). Lots of organisations and consultancies have been publishing their take on the “coming wave” of things that we need to worry about in data protection and privacy. I’ve been told by our marketing team that I needed to write something about this too.

So, here’s my controversial take on things, based on my 20+ years at the coal face of data stuff.

The root cause of all the headaches and problems that are coming with emerging technologies and applications for data is the failure to address fundamentals of data management and the habit of technology vendors (and, dare I say it, some consultancies, and even some professional bodies) to stick lipstick on a pig and sell it as something new.

Seriously. How hard is it for organisations to get their heads around the basics of data quality? Turns out, it’s very hard, because I haven’t seen the dial shift significantly in the 20+ years I’ve been working in this field. The tools and technologies for mopping up the mess (and indeed making the mess) get technically more impressive every few years, but the fundamentals of business leadership and of thinking about data as a “thing” that needs to be managed don’t seem to have shifted a jot. A colleague (who must remain nameless) raised this in an email discussion with a group I am involved in, and she nailed it:

“Tools don’t solve process problems and process problems are at the heart of failure to get value from data. Tools do even less to solve content problems”

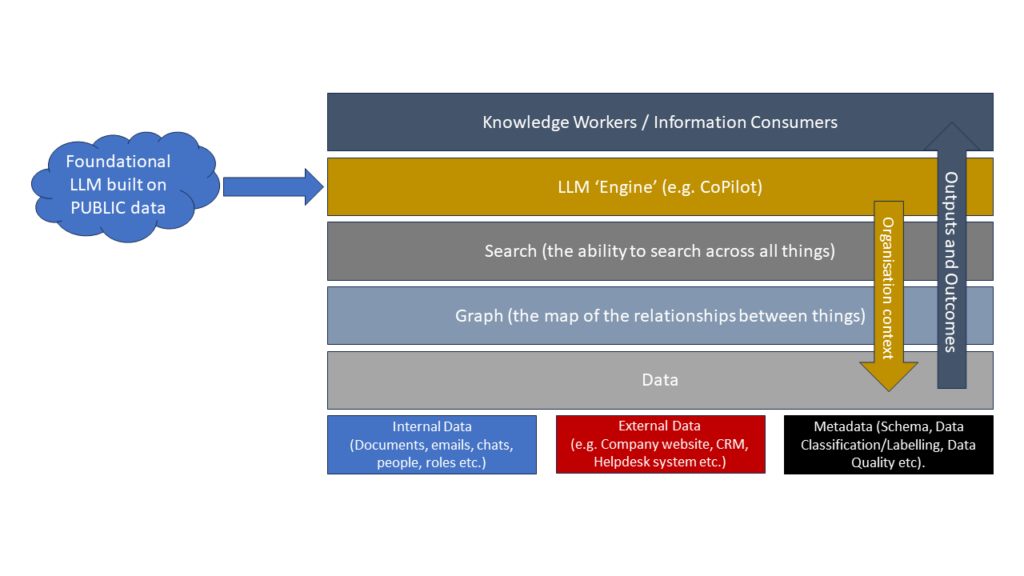

I’ve seen a number of articles and opinion pieces talking about the importance of empowering knowledge workers in organisations to do more with data. But that focus on the knowledge worker in data was something that my friend and mentor Larry P. English was writing and teaching about in 1999. It is worth remembering that Larry was channelling the lessons of Deming and Drucker when he was talking about Knowledge Workers and their role in data management and the “Realised Information Age”. So, none of this is actually “new”.

Guess what… It. Hasn’t. Happened.

Root cause: the required changes in management behaviour and thinking have not been made. Shortcuts have been adopted, with the conflation of data and technology leading to the “Lure of the Shiny”, where available budgets are applied to speculative technologies that might deliver a benefit at the expense of incremental improvements to data governance, data quality, and regulatory compliance that will deliver a benefit. The reported costs of compliance with (for example) data protection laws are, when we look at them objectively, often just the governance and technical debt in organisations falling due.

But, what does the data tell us?

- Only 3% of Irish organisations studied have data that is “fit for purpose” (source: UCC/IMI/Dr Tom Redman)

- The cost of non-quality data in organisations is in the range of 15%-25% of turnover (source: various, most recently Tom Redman)

- Organisations that improve data protection governance see a significant reduction in their sales cycles (source: Cisco 2018 Data Privacy Maturity Benchmark)

- Organisations that improve data protection maturity see strong ROI on that investment (source: Cisco 2020 Data Privacy Maturity Benchmark)

The data are clear: addressing these fundamental issues has a significant bottom line and ROI effect. But organisations seem to resist doing anything about it that doesn’t involve some new and shiny technology, often undervaluing and underinvesting in the people and process aspects of information management as they are dazzled by “The Shiny”.

So, as I read the various predictions about trends and issues in data protection and privacy that were being published yesterday, I was struck by how the focus was on emerging issues, risks and threats from new technologies or the mainstreaming of other technologies. I was struck by the fact that these technologies (the “Internet of Bodies” for example) are simply technologies that are gathering MORE data to pile into databases and decision systems that are creaking at the edges due to historic data management deficits. I compared the “life is a lot like Star Trek” promise of some of these technologies with the grim reality of recent client engagements where siloed Microsoft Access databases and spreadsheets are still the REALITY of data management in many organisations.

I read the discussions of the next iteration of “Chief Data Officer” as a role (a role that did not exist 15 years ago), and wondered when we will see “Plumber 5.0” or “Bricklayer 7.35, Service Pack 4” as a job title. After all, the fundamentals of bricklaying and plumbing haven’t changed in millennia, but the fancy new technology for doing those roles surely means we need to do a hard reset on the job descriptions for this age of leaders?

My marketing team tell me I need to make some predictions for what the coming trends will be, so we can be up there with the cool kids. So… here we go…

- Technology will continue to evolve. Academics seeking to demonstrate the commercial potential of technologies they are working with will continue to seek collaborations with industry and will churn out papers saying that Widget X type technologies are an essential enabling technology for the future. However, they will continue to miss the point that, outside of a research lab context, the rules and the data are often complicated and messy.

- Technology will continue to evolve (part 2). Tool vendors (hardware and software) will promote their shiny toys as the solution for things. Just like a particular brand of cigarettes used to make the women swoon, according to their PR teams, the latest technology (built on the leading academic research) will be the fix for what ails ya and will give lots of data to help us inform and shape reality.

- Reality will disagree with all of this and we will have continuing, and growing, problems with data integration and interoperability, with the quality of data, the security of data, and the implications of data use in the real world. The root cause of this will be a failure to consider process issues and wider people issues in the use of data (and I’d include ethics in data management as part of that).

- The technological panacea that academics and technology vendors will have sold us will arrive looking more like a scene from Lord of the Flies meets Blade Runner, because the fundamental issues will not have been addressed. The bad stuff that was happening slowly and on a small scale, regarding data protection compliance and other costs of non-quality, will be happening at a scale and pace beyond the ability of the organisation’s processes and controls to deal with.

- Faced with this problem, someone will produce another technical solution.

- Legislation will be criticised for not keeping up with emerging technology, when all the time its purpose is to get people focussing on the fundamental issues that are otherwise being ignored.

Until we tackle the fundamental issues of data competency* in organisations, at operational and strategic levels, I fear I will be like Tom Cruise in Edge of Tomorrow, seeing the same mistakes being made until such time as we break the loop. To paraphrase my anonymous friend, the key questions we need to address to make that happen are:

- How do we enable organisations to get value from their data, however they may define “value”?

- How do we prevent the misuse of data, whether intentional or accidental?

- How do we mitigate the downstream impacts and implications of data management decisions?

Until then… the cycle will continue.

*Yes. I know the buzzword du jour is “Data Literacy” but that hasn’t been defined properly or consistently yet (most of the definitions I’ve seen continue to conflate technology and process/human factors) and I’m already seeing “Data Literacy 2.0” being discussed, before we’ve even defined the problem we are solving. Data Competency is my current next-best-alternative-to-an-agreed-term. Work I’m doing with dataleaders.org might change that in the future.